Dashboards

Embedded visibility for on-call teams

Project overview

ETL built for Austin MetroBike transparency

This project implements an ETL pipeline that processes historical bike-sharing data from Austin (2013–present). The goal is to help stakeholders make informed decisions about bike usage patterns, station performance, demand distribution, and vehicle and subscription trends.

The pipeline extracts raw trip data, transforms it to calculate useful metrics (e.g., trips per kiosk, trips per hour, top stations, subscription and bike type analysis), and loads the results into PostgreSQL fact and dimension tables for visualization and analysis.

Source datasets are publicly available:

- Austin MetroBike Trips

- Austin MetroBike Kiosk Locations

Stakeholder requirements

What teams asked for

- Trips by Start Kiosk: Total trips per kiosk aggregated by day of week, month, and year.

- Demand Distribution Over Time: Hourly trips segmented by day of week, month, and year.

- Geospatial Analysis of Stations: Identify and visualize the top 20 kiosks by number of trips for each day of week and month.

- Trips by Subscription Type: Total trips made by each membership/pass type.

- Trips by Vehicle Type: Total trips by bike type (Classic vs. Electric).
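The requirements above all reduce to grouped counts over trip records. The following is a minimal sketch of that logic in plain Python rather than Spark, so it runs without a cluster. The field names (`start_kiosk`, `checkout`, `pass_type`, `bike_type`), the sample rows, and the function names are hypothetical and are not taken from the project's code or the real dataset columns.

```python
from collections import Counter
from datetime import datetime

# Hypothetical sample rows; field names and values are assumptions for illustration.
trips = [
    {"start_kiosk": "Kiosk A", "checkout": "2023-06-03 09:15:00", "pass_type": "annual", "bike_type": "electric"},
    {"start_kiosk": "Kiosk A", "checkout": "2023-06-03 17:40:00", "pass_type": "day", "bike_type": "classic"},
    {"start_kiosk": "Kiosk B", "checkout": "2023-06-05 09:05:00", "pass_type": "annual", "bike_type": "classic"},
]


def parse(ts):
    """Parse a checkout timestamp string into a datetime."""
    return datetime.strptime(ts, "%Y-%m-%d %H:%M:%S")


def trips_by_kiosk(rows):
    """Count trips per (kiosk, weekday, month, year); weekday 0 is Monday."""
    counts = Counter()
    for row in rows:
        ts = parse(row["checkout"])
        counts[(row["start_kiosk"], ts.weekday(), ts.month, ts.year)] += 1
    return counts


def hourly_demand(rows):
    """Count trips per (hour, weekday, month, year)."""
    counts = Counter()
    for row in rows:
        ts = parse(row["checkout"])
        counts[(ts.hour, ts.weekday(), ts.month, ts.year)] += 1
    return counts


def top_kiosks(rows, n=20, weekday=None, month=None):
    """Return the n busiest kiosks, optionally filtered by weekday and month."""
    counts = Counter()
    for row in rows:
        ts = parse(row["checkout"])
        if weekday is not None and ts.weekday() != weekday:
            continue
        if month is not None and ts.month != month:
            continue
        counts[row["start_kiosk"]] += 1
    return counts.most_common(n)


def trips_by(rows, field):
    """Count trips per value of one categorical field, e.g. pass or bike type."""
    return Counter(row[field] for row in rows)
```

For example, `trips_by(trips, "pass_type")` covers the subscription requirement and `trips_by(trips, "bike_type")` covers the vehicle requirement. In Spark, the same shapes would typically be expressed as grouped aggregations over a DataFrame.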

Proposed data warehouse

Blueprints before build

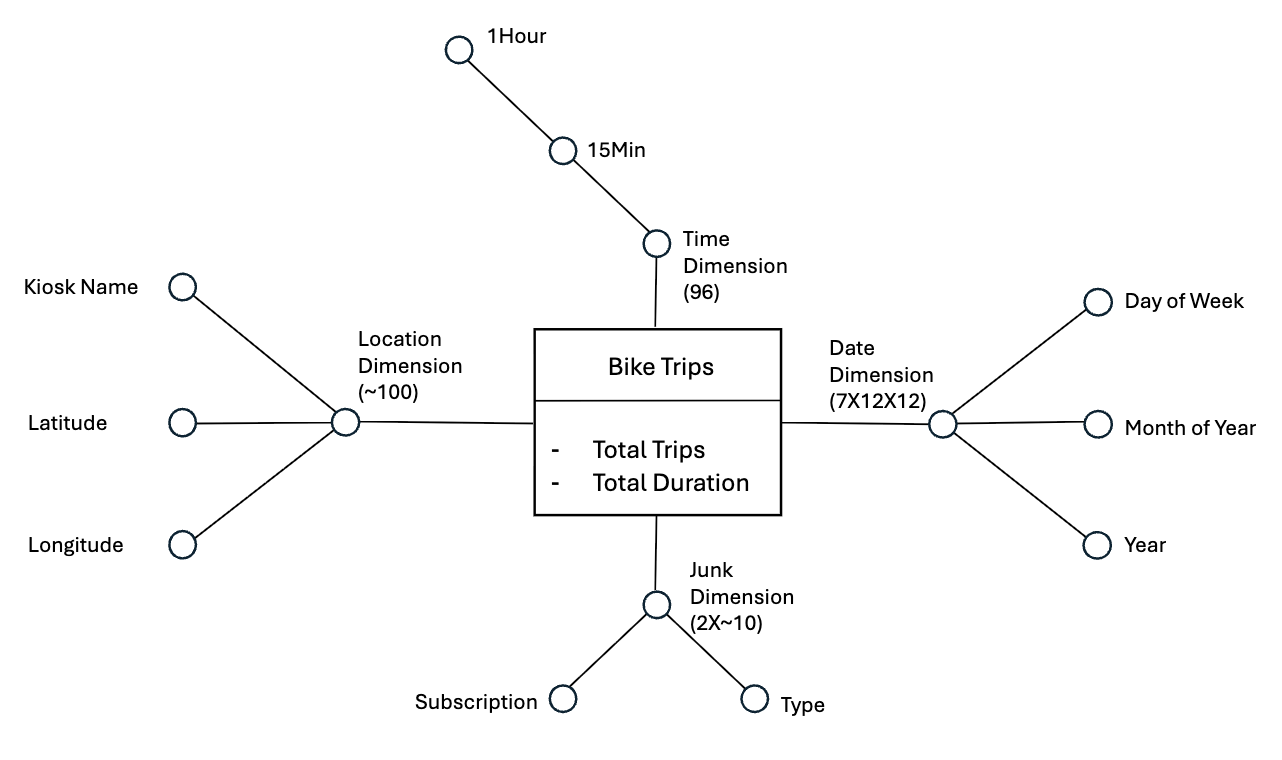

Schematic design

The conceptual design covers the fact and dimension entities aligned to trip events, kiosks, vehicles, and subscriptions.

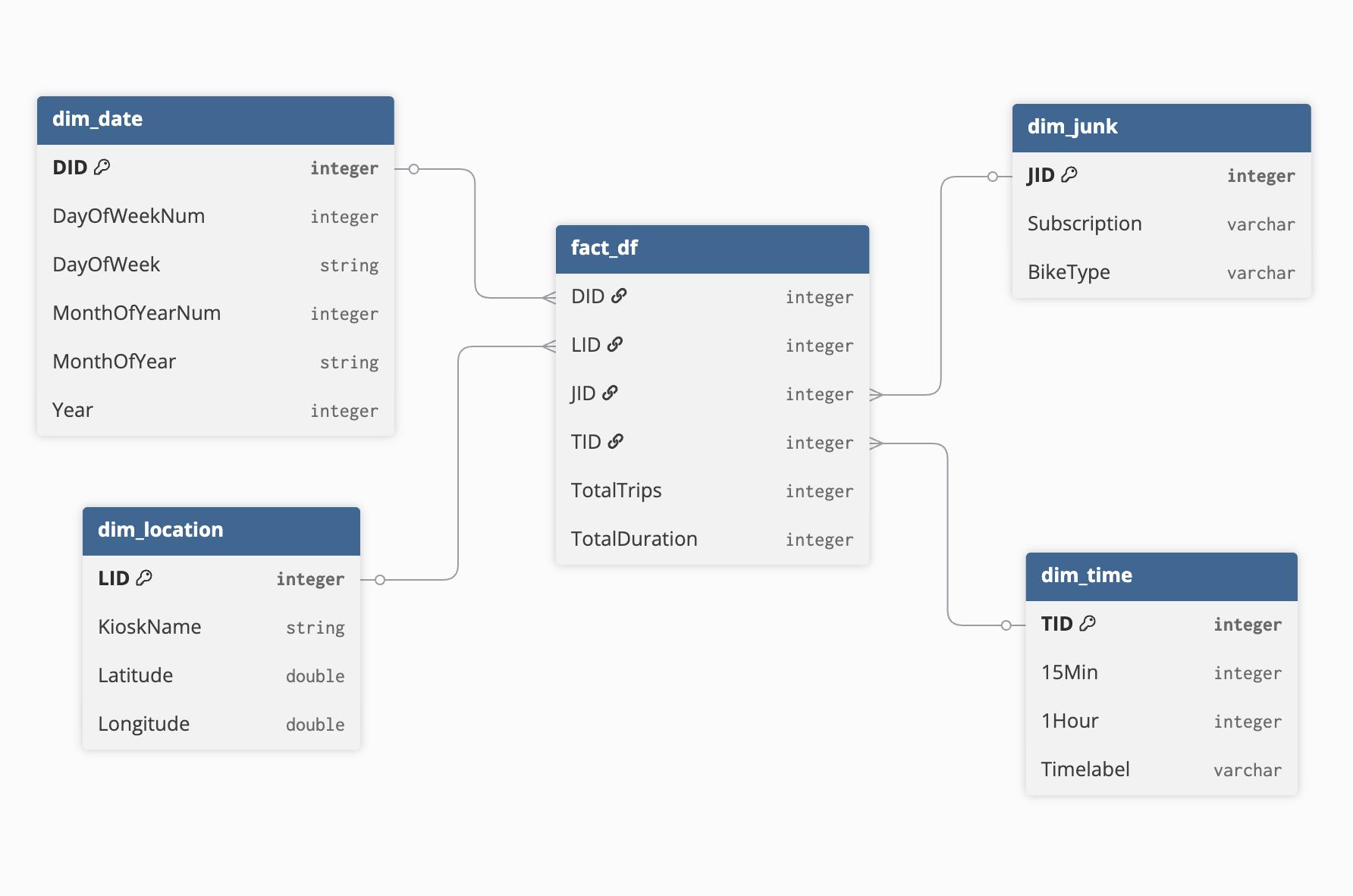

Logical design

The logical schema was drawn in DBDiagram to guide implementation and enforce relational integrity.

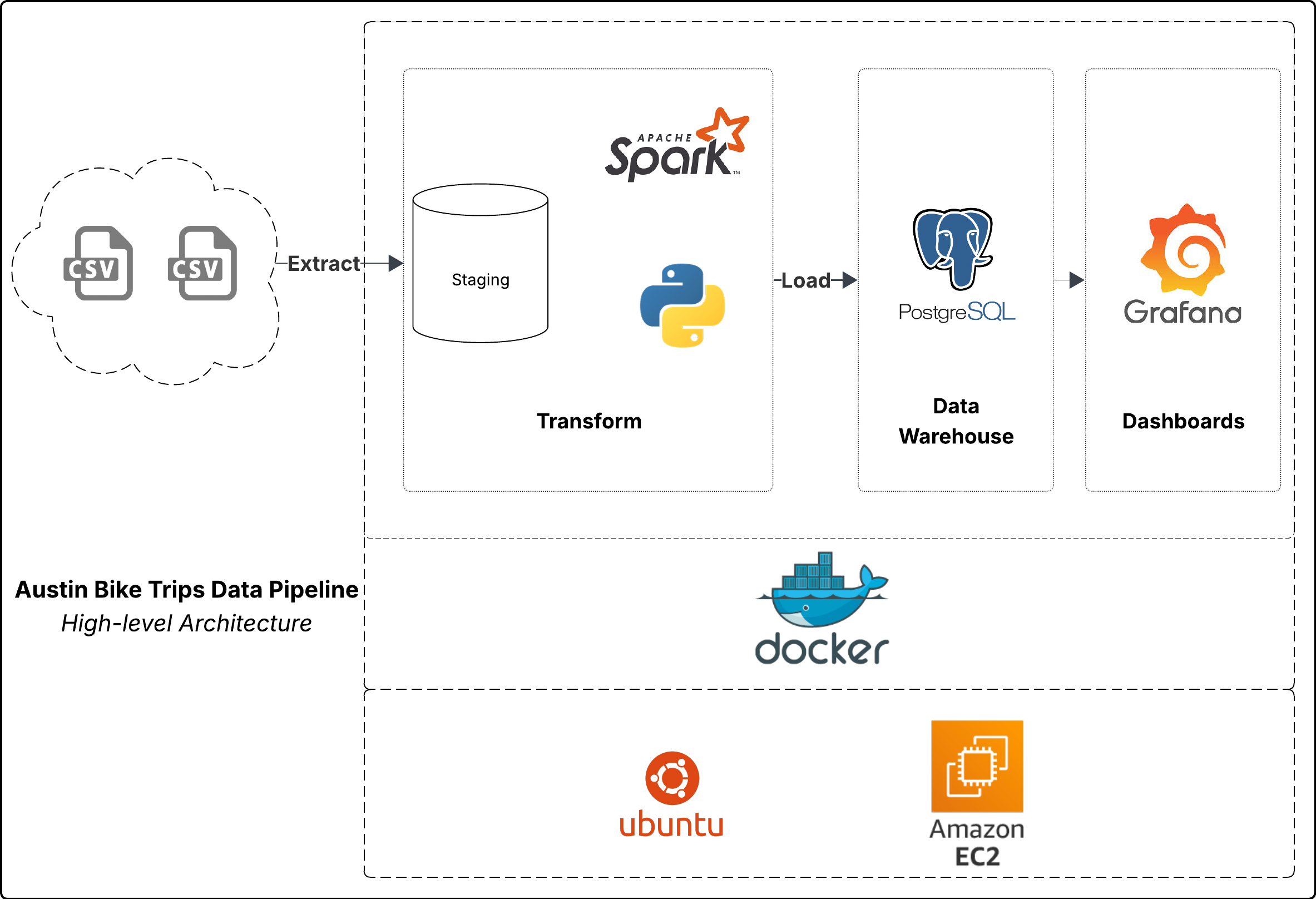
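To make the idea of relational integrity concrete, here is a small sketch of a star schema with one fact table and three dimensions. It uses Python's built-in `sqlite3` as a stand-in for PostgreSQL so it can run anywhere. All table and column names are assumptions for illustration and may differ from the actual diagram.

```python
import sqlite3

# Hypothetical star schema; names are illustrative, not the project's real tables.
SCHEMA = """
CREATE TABLE dim_kiosk (
    kiosk_id INTEGER PRIMARY KEY,
    name     TEXT NOT NULL
);
CREATE TABLE dim_bike_type (
    bike_type_id INTEGER PRIMARY KEY,
    label        TEXT NOT NULL UNIQUE
);
CREATE TABLE dim_subscription (
    subscription_id INTEGER PRIMARY KEY,
    label           TEXT NOT NULL UNIQUE
);
CREATE TABLE fact_trip (
    trip_id         INTEGER PRIMARY KEY,
    kiosk_id        INTEGER NOT NULL REFERENCES dim_kiosk(kiosk_id),
    bike_type_id    INTEGER NOT NULL REFERENCES dim_bike_type(bike_type_id),
    subscription_id INTEGER NOT NULL REFERENCES dim_subscription(subscription_id),
    checkout_ts     TEXT NOT NULL
);
"""


def build_schema():
    """Create the schema in an in-memory database with foreign keys enforced."""
    conn = sqlite3.connect(":memory:")
    # SQLite only enforces foreign keys when this pragma is switched on.
    conn.execute("PRAGMA foreign_keys = ON")
    conn.executescript(SCHEMA)
    return conn


def insert_trip(conn, trip_id, kiosk_id, bike_type_id, subscription_id, checkout_ts):
    """Insert one fact row; raises sqlite3.IntegrityError on an unknown dimension key."""
    conn.execute(
        "INSERT INTO fact_trip VALUES (?, ?, ?, ?, ?)",
        (trip_id, kiosk_id, bike_type_id, subscription_id, checkout_ts),
    )
```

With the foreign keys in place, a fact row that points to a kiosk missing from `dim_kiosk` is rejected at insert time, which is the kind of guarantee a logical schema is meant to provide.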

Project architecture

What happened under the hood

The list below outlines the high-level architecture of the ETL pipeline, following the operational flow from the raw data pull to the dashboards teams use every day.

- Data acquisition: Automated via run_etl.sh; raw datasets land in data/raw.

- ETL processing: etl_main.py with Apache Spark performs feature engineering, dimension extraction, and measure computation.

- Data storage: Fact and dimension tables are written into PostgreSQL.

- Visualization: Grafana dashboards provide interactive filtering and exploration.

- Containerization: Docker orchestrates Spark, PostgreSQL, and Grafana services.
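The flow in the list above can be sketched as three small stages. This is a simplified illustration, not the contents of `etl_main.py`: it uses the standard library instead of Spark, an in-memory SQLite database instead of PostgreSQL, and hypothetical column names, table names, and sample rows.

```python
import csv
import io
import sqlite3
from collections import Counter
from datetime import datetime

# Hypothetical raw extract; the column names are assumptions for illustration.
RAW_CSV = """trip_id,checkout_kiosk,checkout_datetime
1,Kiosk A,2023-06-03 09:15:00
2,Kiosk B,2023-06-03 09:45:00
3,Kiosk A,2023-06-05 17:20:00
"""


def extract(raw_text):
    """Read raw CSV text into a list of row dictionaries."""
    return list(csv.DictReader(io.StringIO(raw_text)))


def transform(rows):
    """Derive the hour feature, extract a kiosk dimension, and count trips."""
    kiosk_ids = {}       # kiosk name -> surrogate key
    measure = Counter()  # (kiosk_id, hour) -> number of trips
    for row in rows:
        ts = datetime.strptime(row["checkout_datetime"], "%Y-%m-%d %H:%M:%S")
        kiosk_id = kiosk_ids.setdefault(row["checkout_kiosk"], len(kiosk_ids) + 1)
        measure[(kiosk_id, ts.hour)] += 1
    dim_kiosk = [(kiosk_id, name) for name, kiosk_id in kiosk_ids.items()]
    fact = [(kiosk_id, hour, n) for (kiosk_id, hour), n in measure.items()]
    return dim_kiosk, fact


def load(conn, dim_kiosk, fact):
    """Write the dimension and fact rows into the target database."""
    conn.execute("CREATE TABLE dim_kiosk (kiosk_id INTEGER PRIMARY KEY, name TEXT)")
    conn.execute("CREATE TABLE fact_trips_hourly (kiosk_id INTEGER, hour INTEGER, trips INTEGER)")
    conn.executemany("INSERT INTO dim_kiosk VALUES (?, ?)", dim_kiosk)
    conn.executemany("INSERT INTO fact_trips_hourly VALUES (?, ?, ?)", fact)
    conn.commit()


def run_pipeline(raw_text):
    """Run extract, transform, and load in order and return the connection."""
    conn = sqlite3.connect(":memory:")
    dim_kiosk, fact = transform(extract(raw_text))
    load(conn, dim_kiosk, fact)
    return conn
```

Keeping the stages as separate functions mirrors the separation in the architecture: acquisition, processing, and storage can each be tested or replaced on their own, and a dashboard tool only needs to query the loaded tables.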
